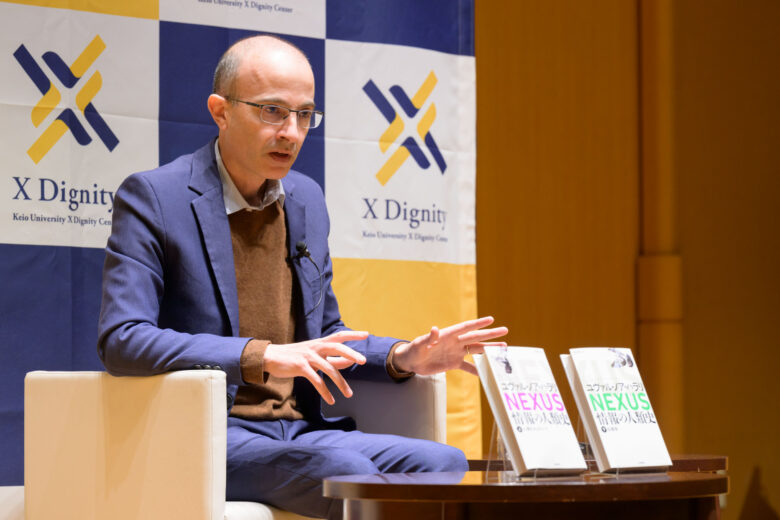

【Event Report】 Special Talk Event with Prof. Yuval Noah Harari (March 16, 2025)

On March 16, 2025, a special event titled “Human Dignity in the Age of AI: Looking Ahead to a New ‘NEXUS’” was held at Keio University’s Mita Campus with Prof. Yuval Noah Harari, historian and author of “Sapiens: A Brief History of Humankind”. Approximately 200 people, including Keio students and faculty members, gathered at the venue for an enthusiastic discussion.

【Outline】

Title: Human Dignity in the Age of AI: Looking Ahead to a New “NEXUS”

Hosted by: Keio University X Dignity Center, Kawade Shobo Shinsha

Venue: Keio University Mita Campus North Hall

Date: Sunday, March 16, 2025, 10:45-12:05

【Program】

10:45-10:50 Opening remarks

Tatsuhiko Yamamoto, Professor, Keio University Graduate School of Law / Co-representative, X Dignity Center

10:50-11:25 Talk Session

Yuval Noah Harari, Historian / Philosopher

Kohei Ito, President, Keio University

11:25-12:05 Q&A with students

■Opening Remarks

Tatsuhiko Yamamoto / Professor, Keio University Law School, and Co-Director, X Dignity Center

In the current information network age, with AI as a new “member,” we are constantly exposed to stimulating, inflammatory, false, and hateful information and expressions through algorithms designed to cleverly capture our “attention.” In this situation, at least to me, it does not feel as though advanced information technology, including AI, is truly enhancing our “dignity.” Looking at the world as a whole, it also seems to me that the “story” of liberal democracy, which has tried to guarantee that we can live as ourselves, is under heavy attack from several companies and superpowers that use algorithms as weapons. If this trend accelerates, we may eventually arrive at an inhuman world in which we are used by AI, which was supposed to be a tool, and our human dignity is disregarded.

Yukichi Fukuzawa, the founder of Keio University, valued “independence and self-respect,” that is, the dignity of oneself and others. Fukuzawa respected the development of science, such as physics and information technology, and saw it as the basis of learning, but he did not become engrossed in science and technology; instead, he aimed to actively build a civilization that balanced truth and order. Last year, Keio University established the X (cross) Dignity Center as an interdisciplinary research institute that fundamentally questions the meaning of dignity in the AI era, in which humans and machines cross paths in various fields. Today, we have invited Professor Yuval Noah Harari, a historian who has just published a new book, Nexus: A Brief History of Information Networks from the Stone Age to AI, which depicts human history from the perspective of “information networks.” Together with President Kohei Ito, a physicist specializing in quantum computing, we would like to explore the possibilities of a new “nexus” (connection) that takes human dignity into account.

■ Talk Session

Yuval Noah Harari / Historian, Philosopher

Kohei Ito / President of Keio University

Premature AI development

Ito.

Four years ago, when I became president of Keio University, a shelf was set up in the university library to display must-read books recommended by the president. Your (Prof. Harari’s) 21 Lessons for the 21st Century was placed in the middle of that shelf. In it, you describe three major global threats.

The first is nuclear war, the second is the uncontrolled progress of biotechnology, and the third is IT, information networks, and AI. But in your new book, Nexus: A Brief History of Information Networks from the Stone Age to AI, your foremost focus is on IT, information networks, and AI. What explains this shift in emphasis?

Dr. Harari.

If we compare AI and biotechnology, it is clear that AI is progressing much faster. Biotechnology can also dramatically change the world, but its progress takes much longer; AI advances millions of times faster than biological processes do.

Nuclear war is simpler by comparison: it clearly has no upside. AI, on the other hand, constitutes a much more complicated challenge, because it also has huge positive potential. That fact makes it difficult to understand what the threats are.

AI is alien, not evil. In many ways it is superhumanly intelligent, but it is not human; AI will surpass human intelligence, yet it remains inherently alien. This makes it difficult to predict how AI will develop and what the consequences will be.

Another thing we can say is that AI is an agent, not a tool. We can decide whether or not to use a tool. AI is the first technology that can make decisions on its own, without our help or intervention. It can decide for itself what to bomb. It can also invent new weapons and new military strategies. At the same time, it can invent new medicines and cures for cancer. Thus, AI has immense potential.

I have chosen to focus on information technology because it is the greatest challenge we face today. For thousands of years, we have dominated the planet, and nothing on earth has been able to compete with us, either in intelligence or in our ability to invent. But perhaps within a few years, we will have an AI that surpasses us and invents everything from pharmaceuticals to weapons.

Ito.

You spoke of this happening within a few years, but already in Homo Deus, published in 2015, you sounded a warning against this advancement of information technology. How have things changed since then?

Dr. Harari.

When I wrote Homo Deus ten years ago, I thought this might happen hundreds of years from now, so it would be a kind of philosophical speculation with little direct impact on our lives. But now, when I talk to people working on AI development at companies in the US and China, they tell me that they could have AGI (Artificial General Intelligence) within five years, or even within just one. In other words, the rate of development is speeding up dramatically.

In addition, it has now become extremely difficult to discuss regulating AGI or to reach agreement on it. Since the last US election, I feel that the possibility of a global agreement on how to manage the risks of AI development has evaporated. When I talk to people from leading AI developers, companies like OpenAI, Microsoft, Tencent, and Baidu, I ask them two questions. The first is, “Why such a rush to move forward?”

I understand that AI has great advantages, but it also carries risks that raise the prospect of human extinction. Humans are very adaptable creatures, but we need more time to respond thoughtfully.

Some people say, “We want to slow down, but we can’t. Because if we slow down, our competitors will win and the most ruthless will rule the world. We cannot trust others, so we must go faster.”

The other question is, “Can you trust the super-intelligent AI you are developing?” And they answer, “Yes.” These are the same people who say we can’t trust other humans, but we can trust AI.

Over thousands of years, humans have learned to trust one another. The global trade network connects eight billion people on the planet. In many cases, our food, clothing, and medicine are made by someone on the other side of the globe. There is trust. But we have no experience with AI yet. We do not know what it will lead to. We have no idea what will happen when super-intelligent AIs connect with each other and with millions of humans. We should be more afraid. And more importantly, how do we restore trust among humans before we develop super-intelligent AI?

Role of elites and what is required of educational institutions

Ito.

How do you see the division between the so-called elite and the non-elite?

Dr. Harari.

As with many such divisions, this term is widely misunderstood. Billionaires like Elon Musk and Donald Trump claim they are the opposite of the elite. The richest people in the world, who control the U.S. government, say they are not elites. In other words, “elite” is a negative label often attached to political rivals. Every group has elites. There are always people who are more talented, more powerful, more influential. This is the nature of human society. The problem is not the existence of elites. The problem is when the most influential people in the group use their power only for their own benefit. Those who have more influence and power should use that power for the good of society.

A society without elites is an illusion. The greatest experiment in creating a completely equal society without elites was communism, and we know how that turned out. This is not what we should be striving for. What is required of all universities and research institutions is not the elimination of elites or the breakdown of barriers between elites and non-elites, but the production of elites who will use power for the benefit of society and its people.

Ito.

What should educational institutions like ours be like? What is the role of education in maintaining human intellectual capacity?

Dr. Harari.

In the past, educational institutions were primarily responsible for providing information to the people. There was only one library in town and the number of books was limited. Now it is quite the opposite. We are bombarded with vast amounts of information. What we need to understand is that much of the information is untrue, and only a fraction of it is true. Most information is junk, fiction, fantasy, lies, and propaganda. Truth is expensive, but fiction is cheap. If you are going to write the truth about something, you need to spend time researching, analyzing, and fact-checking. It takes time, effort, and money. Fiction, on the other hand, is very cheap. All you have to do is write whatever comes to mind.

Also, reality is complex. Fiction can be as simple as you want it to be, because you can just make it up. And finally, truth is often painful. Not always, but there are aspects of reality that we want to turn away from. If everything in life were pleasant and comfortable, we wouldn’t need therapists. So, in the competition between expensive, complicated, painful truth and cheap, simple, attractive fiction, fiction wins. And the world is flooded with an enormous amount of fiction.

The role of educational institutions and the press is not only to provide people with more information. I think we already have too much information. Their role is to find the rare gems of truth in the sea of information and provide a way to distinguish them from the rest. As a historian, what I teach my students is not facts about the past. Those can be found on the internet. What they need to learn in history is how to distinguish between reliable and unreliable historical sources.

How do I know if I can trust this TikTok video or not? When you find a document from a thousand years ago, how do you know if you should believe what is written in it? People lied even a thousand years ago. The important thing is not to get more information. It is how to tell the difference between reliable and unreliable information. This is true in any field.

Build trust between humans

Ito.

Reading Nexus: A Brief History of Information Networks from the Stone Age to AI, I had mixed feelings about the reliability of information, but at the same time I was hopeful that it is not too late to establish trust. As Professor Yamamoto mentioned, a research center called the “X Dignity Center” has been established at Keio University to address this very issue. If you were to become a member of this center, what would you research?

Dr. Harari.

How to build trust between humans. I think that is the most urgent issue because what we see now in the world is the collapse of human trust. The biggest crisis in the world is not AI. It is the distrust between humans that makes the AI revolution so dangerous, though it is not too late to build enough trust among humans to bring AI under control.

Ito.

What approach would you take to address this challenge?

Dr. Harari.

That is why we need an interdisciplinary research center. We need insights from biology and psychology. We need to understand genetics. We can also learn a lot from how chimpanzees build trust. Of course, we also need insights from economics and computer science. Many of the lessons about building trust between humans may have worked ten or twenty years ago but are now outdated, because we now communicate through computers.

If you look at child mortality and disease rates, they are declining. Yet people are angry, frustrated, and unable to talk to each other. What has happened? New technologies have come along, and most communication is now mediated by non-human agents. That is not to say that we should throw away our smartphones and computers. What we need to do is rethink how we build trust between humans through the medium of technology.

Do what each of us can

Ito.

Your book is so readable. It’s very different from academic writing. Do you do this intentionally?

Dr. Harari.

Of course. I see my role as building bridges between academic communities. Often, professionals have difficulty conversing with experts in other fields and with people who do not have an academic background. Therefore, I have tried to take the latest theories, models, and findings in academia and explain them in a way that high school students, or anyone who is interested in the AI revolution, can understand. Can someone who has never studied computer science understand, in simple terms, what the AI revolution and the history of information are about? That was the aim of the book.

Ito.

Do you feel that your efforts are reaching people who live so much of their lives in cyberspace?

Dr. Harari.

It is difficult to find out what impact a book actually has on people. About ten years ago, my husband and I started a social impact company. We have twenty people working for us: I write the books and he handles the business side. We also have a social media presence so that we can reach more people with the ideas we put into the books. I don’t know if this is enough, but we want to do what we can and leave what we can’t do to those who can. No one can carry the entire world on their shoulders alone. It is still not too late if each of us does what we can.

Ito.

How do you see developments, especially since the start of the Trump administration?

Dr. Harari.

It’s been very worrying, because of the accelerating erosion of trust within and between societies. We see that the international order established after the Second World War is now collapsing. Its most important new rule was that strong nations should not simply invade and conquer weaker ones. This was in complete contrast to thousands of years of earlier history, when it was common for a stronger country to conquer its weaker neighbors and build a bigger country or empire. Up to the early 21st century, humanity enjoyed the most peaceful and prosperous era in its history, largely because this taboo was maintained.

There are still conflicts in many parts of the world, such as the Middle East and Israel. I know very well that conditions are not perfect everywhere, but the situation was better than anything that existed before. You could see this most clearly in government budgets.

For most of history, more than 50% of the budget of every king, shogun, or emperor was spent on the military. In the early 21st century, average government expenditure on the military went down to about 6 to 7%, an amazing achievement, while about 10% went to healthcare. It was the first time in human history that governments all over the world spent more on healthcare than on the military.

Now, however, things are going in the opposite direction. As a result of Russia’s invasion of Ukraine, the U.S. defense budget has risen sharply, at the expense of healthcare and education. Trump’s idea of world order is that the weak must follow the strong, and if they do not, the resulting conflicts are their own responsibility. Such logic sends humanity back to a state of constant warfare. Not only will this reduce budgets for healthcare and education, but it will also make it impossible to regulate AI, because the losers in the AI race will have to follow the winner all the way.

Ito.

But your book gives us hope. There is still hope: if people do the right things in the next few years, humanity will be okay.

■Q&A with students

Student.

As AI has evolved, it has become a network, but humans are increasingly fragmented among themselves. You mentioned that Homo sapiens has a special ability to maintain groups, and that widely shared narratives (intersubjective reality) have played an important role in this. What role do you think such narratives will play in the future?

Dr. Harari.

Our great ability as Homo sapiens is to build very large networks of cooperation among thousands, millions, and billions of people through shared narratives. Perhaps the most successful example of this is money. Money is the only story everyone believes in.

But now this story is being threatened by cryptocurrencies, as more and more people say, “I don’t want dollars, I want cryptocurrencies.” This is a sign that cannot be ignored. While dollars are created by human institutions like banks, cryptocurrencies like Bitcoin and Ethereum are post-human currencies created by algorithms. In other words, those who say they don’t trust dollars but do trust Bitcoin are saying they trust algorithms more than humans.

Elon Musk and Trump have fired tens of thousands of human bureaucrats, but they are not against bureaucracy. They are simply trying to replace the human bureaucracy with an algorithmic digital bureaucracy.

Before social media, every story out there came from a human mind, and it was human editors who decided which stories would dominate the conversation. An editor at a newspaper or TV station would look through all the stories published that day and decide which ones were the most important. But now this is done by algorithms. Stories are being created and spread by non-human entities.

We also need to distinguish between freedom of expression and freedom of information. Freedom of expression is a human right and should be protected; people should have the right to express their opinions without fear of being punished, whether on TikTok, Facebook, or anywhere else, except in extreme circumstances.

Freedom of information, on the other hand, is something entirely different: a new right given to AI, algorithms, and bots. Many of the social media giants are intentionally confusing these two rights. The problem in social media is not that humans lie. Humans have been lying for thousands of years. The problem lies with algorithms that intentionally spread lies and hatred. This should be stopped and punished.

If I am a YouTube user and I make a fake video, I should not necessarily be punished unless it causes harm. However, if I am a company and I intentionally recommend and play hateful conspiracy theories to millions of people and make money from them, then I should be held accountable. In this case, it is not a question of freedom of expression, because the theories are spread by algorithms, and algorithms have no rights. Social media companies, just like newspapers and TV stations, should make sure that the content they promote is true and not a hateful lie.

Student.

In the past, the wealth possessed by nations was tangible, such as natural resources. Today, however, wealth is being transformed into intangible assets based on data and technology. Cyber warfare using AI is already taking place, but it has not ended the tragedy of bombings and killings, as the Russia-Ukraine war shows. No matter how intelligent AI becomes in the future, will the atrocities of war remain as long as there are humans with feelings of hatred?

Dr. Harari.

The development of information technology does not abolish the physical world. No human-created information, whether in the form of constitutional law, religion, or money, replaces or abolishes the physical world. Humans still have bodies, they still need to eat, they still need clean water.

And war, after all, is still about killing people. But the way it is done is changing. Many people thought that AI would do the actual shooting, but that is still done by humans. What AI does is decide what to target, and this has happened in the last couple of years. Previously, the job of identifying targets was done by human officers. They would spend days gathering all the information and deciding where to bomb. But now it is done in seconds by AI, which looks through a huge amount of information that humans cannot analyze, finds patterns, and within seconds says, “This is an enemy command post, bomb it.”

This is done using statistical probabilities and very complex mathematical models. The big question is, can we believe the AI? AI may make mistakes. It might recommend bombing a civilian building instead of a military one. Some say that after AI indicates a target, a human analyst reviews all the information to make sure it is correct; others say the check takes no more than 30 seconds. No one can really check the AI. Looking toward the future, say, in 5 or 10 years, AI will be making even more important decisions in warfare. This is very dangerous.

Student.

You have written that the development of the COVID-19 vaccine was a very significant advance, but there were also many anti-scientific ideas and conspiracy theories circulating at the time. If the same anti-scientific ideology flares up again, what measures can be taken?

Dr. Harari.

Anti-scientific ideas and conspiracy theories are not new. They have existed throughout history. Truth is complex; fiction is simple. The truth about pandemics is very complex. For most of our history, humankind did not know that viruses existed. We are only beginning to understand what viruses are, and there is great controversy in the scientific community. Unlike bacteria, viruses do not grow by consuming food. Viruses are just pieces of genetic information. And the amazing ability of this information is that it can get into the cells of the human body and make those cells start producing more copies of its code. It takes over the human body.

This is very strange. Nothing like this happens at the level of everyday, easily perceptible reality. That’s why it is very difficult to understand how a pandemic starts and spreads. It is much easier to believe in conspiracy theories. As a historian, I can tell you that these types of conspiracy theories are always wrong.

When the US invaded Iraq, it failed even though it was the most powerful country in the world and had all the military might. Ultimately, Iran was the main beneficiary and the victor of the war. In other words, the US had a plan, but things turned out quite differently. This is the reality. Conspiracy theories are usually based on the wishful thinking that the world is a very simple place, where a few people can plan to take over the world and then successfully carry out their plan. Reality is not so simple.

Student.

In the social media world we live in today, YouTube, X, and TikTok are widely prevalent, and there is a trend of finding fun and entertainment in them. How do you think fun and entertainment will change in the AI-driven world in the future?

Dr. Harari.

Throughout history, all entertainment, whether poetry, theater, television, or film, has come from the human imagination. Now we have something that is much cheaper and more efficient than humans and can produce things beyond the limits of human imagination. Human imagination is constrained by our brains and our biology, but AI has none of those limitations.

To give an example, in 2016 there was a very famous match between AlphaGo and the world Go champion, Lee Sedol. The remarkable thing about this match was not only that AI defeated the world champion, but also that, although humans have been playing Go in East Asia for thousands of years, no one had ever come up with a way of playing like AlphaGo’s. Then AlphaGo appeared. The same thing will happen in music, painting, theater, and television, and it is already happening.

A lot of people are now working in AI-based video production, and this is only the beginning. As in every other field, entertainment will change completely, but we cannot predict how it will change; if we could predict what AI is going to do, then it would not be AI.

Student.

As people’s values become more diverse due to the convenience of the Internet, it is easy to imagine a situation in which most people no longer have access to accurate information. In such a situation, how can people reach a consensus?

Dr. Harari.

I still don’t know how we can reach consensus among humans in an age when human communication is being manipulated by new information technologies. One of the most important things in science is to admit ignorance. Before science, humans used to claim that they knew everything. Everything was written in the scriptures, so there was no need for new research.

The scientific revolution was about people coming and saying, “We really want to know this, but we don’t know. Therefore, we need to do research.” It is the same today. If I were asked to choose the most important research topic, I would say how to build trust between human beings in an age when we communicate through new technologies. What we have learned about building trust over thousands of years is no longer valid. And we need more researchers to focus on this topic.

Student.

If future AI works as an agent, how will AI-led research differ from research led by humans?

Dr. Harari.

AI is revolutionizing scientific research. In 2024, both the Nobel Prize in Physics and the Nobel Prize in Chemistry were awarded for work in AI. I was particularly surprised that the Nobel Prize in Chemistry was awarded to the developers of AlphaFold. Their original specialty is not chemistry, although they needed knowledge of chemistry to develop AlphaFold. But their discovery changed chemistry, biology, and medicine by solving a huge scientific puzzle: predicting how proteins fold. What we can see from this is not only that AI will be better than humans in more fields, but also that AI can break down the barriers between disciplines.

Human capabilities are limited. We don’t have time to study physics, economics, chemistry, history, and all of them in depth. AI, however, has no such limitations. Therefore, in ten years’ time we may see the emergence of AI that can deeply understand each academic field and make discoveries at a level that humans cannot, by combining various data and models.

■Message from Dr. Harari

There is so much to think about and so much research to be done. The people who develop AI talk about its positive possibilities, but to balance the benefits and risks, we need philosophers, historians, and critics. That is why I focus on the risks when I give speeches. But there are also enormous positive possibilities. I don’t want to stop AI development; I just want to make sure it’s done safely. The key here is to build trust among humans. Trust is the very foundation of life, in the sense that we cannot live even for a minute without being able to trust others and what is outside of us. We cannot breathe if we cannot trust the air around us. There are great problems in the world, but ultimately, the foundation of life is trust.

Photo by Takeshi Kishi

Written by Kaori Suzuki